The Real Cost of Chasing AGI: Power Consolidation and Societal Risks

The race to achieve Artificial General Intelligence (AGI) is on, with tech giants and research labs pouring billions into the effort. But amid the excitement and potential benefits, a critical question looms: what is the real cost of chasing AGI? In its "Artificial Power" report, the AI Now Institute argues that power consolidation is just the beginning of a series of societal and environmental risks we need to address.

The AI Now Institute's Warning: Artificial Power

The AI Now Institute's "Artificial Power" report highlights the growing concentration of power in the hands of a few companies driving AGI development. This consolidation isn't just about market dominance; it gives these companies the ability to shape the future of AI in ways that serve their own interests, possibly at the expense of broader societal well-being. The report argues that the current trajectory of AI development is not inevitable and that public agency is needed to reclaim control over the future of AI.

Risks of Unchecked AGI Development

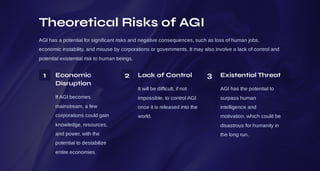

The unchecked pursuit of AGI carries several significant risks:

- Power Consolidation: A few powerful companies control the resources, talent, and infrastructure needed for AGI development, leading to a concentration of power and influence.

- Environmental Impact: Training large AI models requires massive amounts of energy, contributing to carbon emissions and environmental degradation. The "Artificial Power" report emphasizes the often-overlooked environmental costs of AI development.

- Societal Bias: AI systems can perpetuate and amplify existing societal biases if the data they are trained on reflects those biases. This can lead to unfair or discriminatory outcomes in areas like hiring, lending, and criminal justice.

- Lack of Accountability: The complexity of advanced AI systems makes it difficult to understand how they reach their outputs and to hold the companies that build and deploy them accountable for the results. This lack of transparency can erode public trust and make it harder to address potential harms.

What's Next? Key Takeaways

The AI Now Institute's report serves as a wake-up call, urging us to consider the broader implications of AGI development. We need to move beyond the hype and engage in a serious conversation about how to ensure that AI benefits everyone, not just a select few. Key takeaways include:

- Promote Transparency and Accountability: Develop mechanisms for understanding and auditing AI systems to ensure they are fair, unbiased, and accountable.

- Invest in Public Research: Support independent research on AI safety, ethics, and societal impact to counter the influence of private companies.

- Regulate AI Development: Implement regulations to address the environmental impact of AI and prevent the concentration of power in the hands of a few companies.

- Foster Public Dialogue: Engage the public in a broad conversation about the future of AI and how to ensure it aligns with our values.