Master SaaS AI Risk: Your Complete Governance Playbook

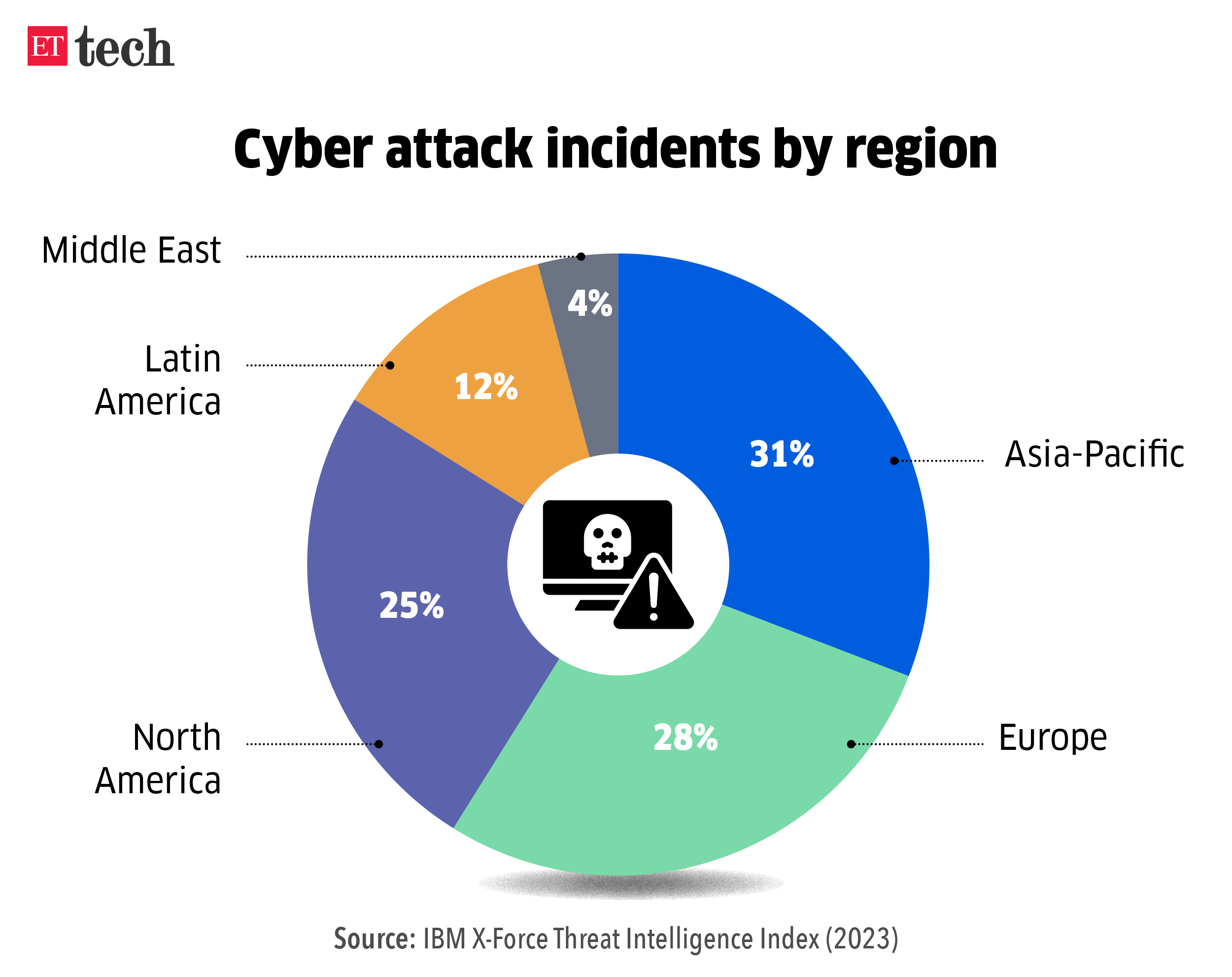

Artificial intelligence (AI) is rapidly changing Software as a Service (SaaS) products, creating new opportunities for innovation and efficiency. Those gains come with significant risks that must be actively managed. This playbook explains the main AI risks in a SaaS environment and how to govern them so that AI adoption stays responsible and secure.

Understanding the Risks of AI in SaaS

Integrating AI into SaaS applications introduces a range of potential risks, including:

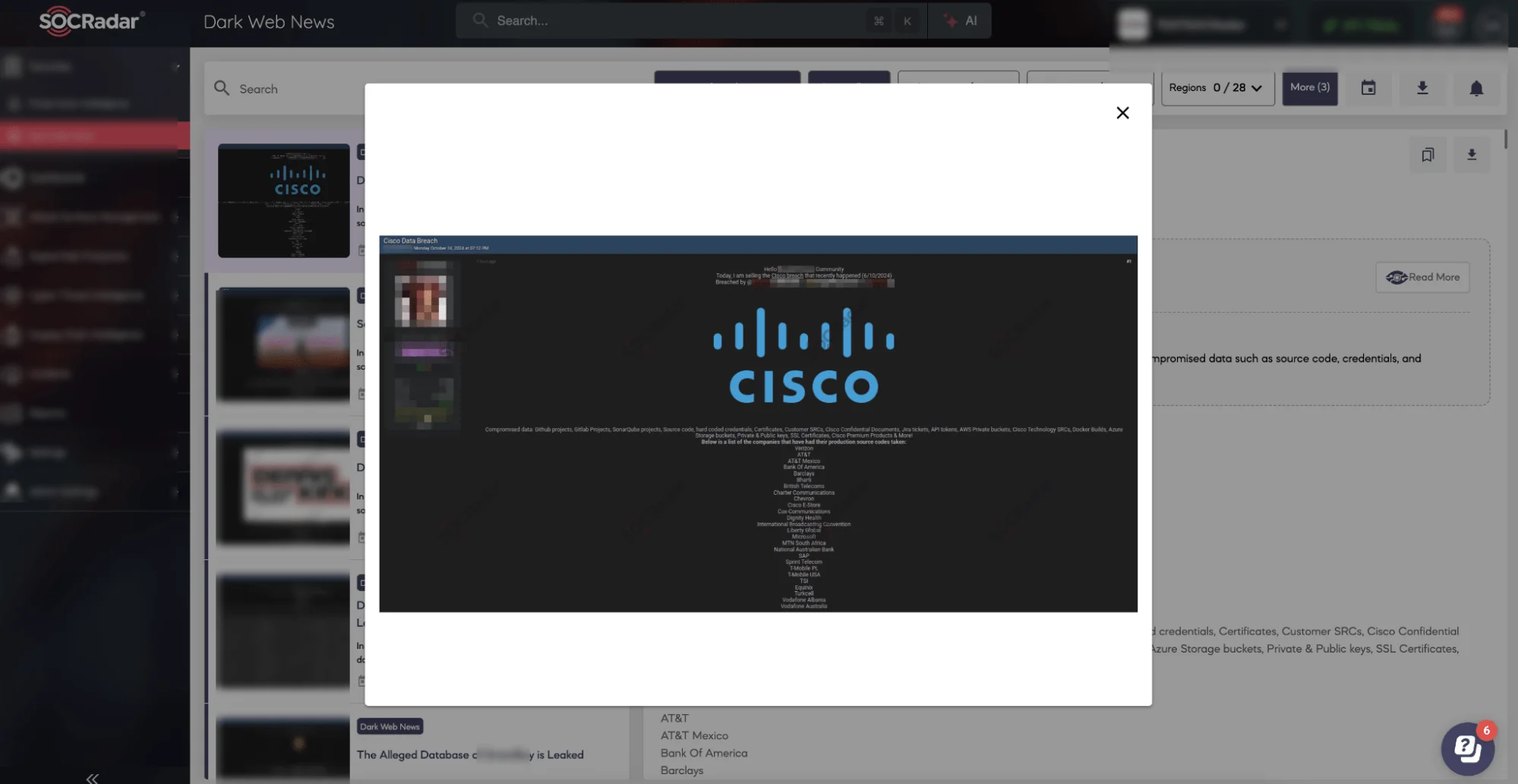

- Data Privacy and Security: AI algorithms often require access to vast amounts of data, raising concerns about data breaches, unauthorized access, and compliance with privacy regulations like GDPR and CCPA.

- Bias and Fairness: AI models can perpetuate and amplify existing biases in data, leading to unfair or discriminatory outcomes.

- Lack of Transparency and Explainability: The "black box" nature of some AI algorithms makes it difficult to understand how decisions are made, hindering accountability and trust.

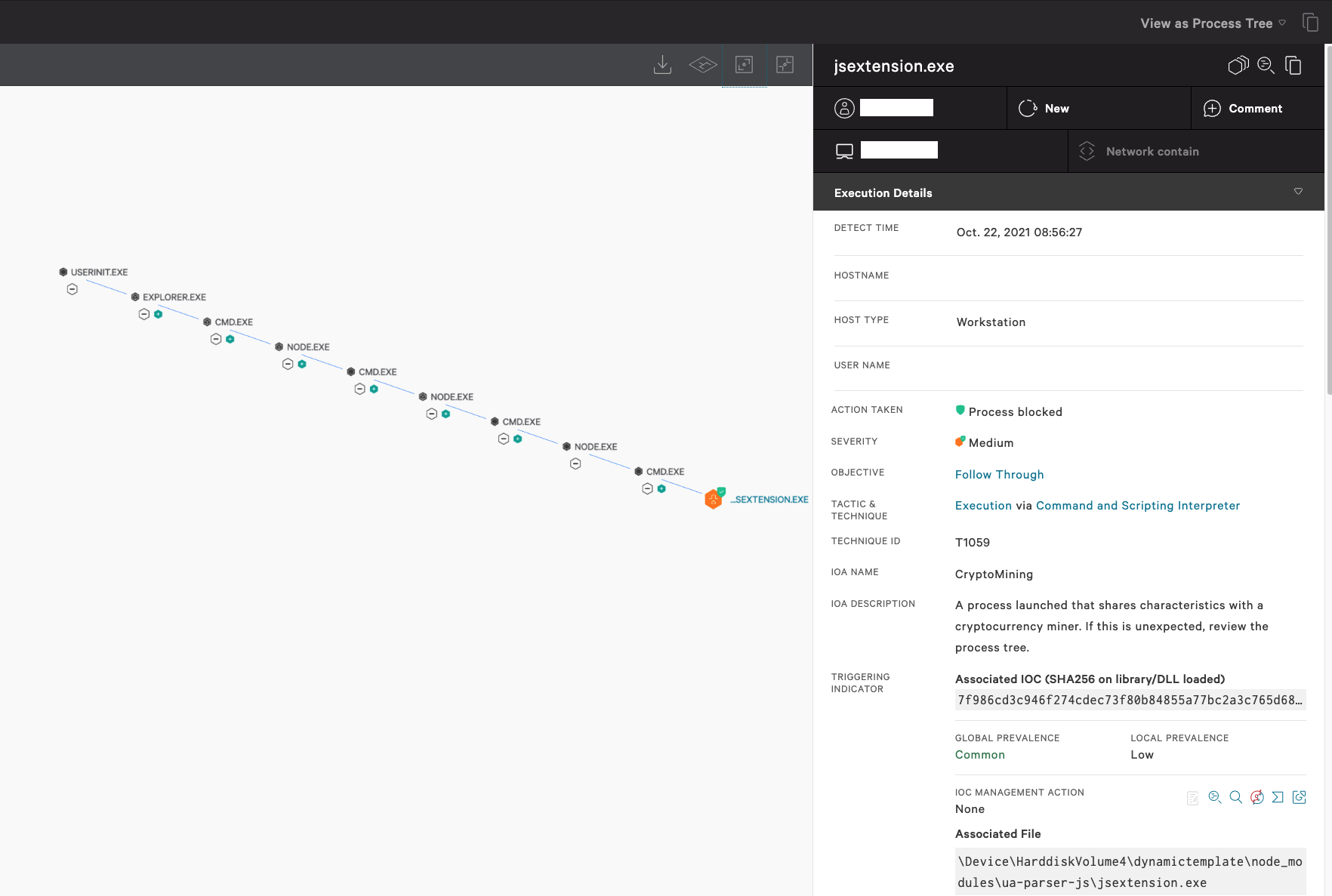

- Model Drift: AI models can degrade over time as production data diverges from the data they were trained on, leading to inaccurate predictions and decisions.

- Third-Party Risk: Many SaaS providers rely on third-party AI services, introducing additional risks related to vendor security, compliance, and performance.

- Shadow AI: Employees may use unauthorized AI tools and applications without the organization's knowledge, which increases risk exposure outside existing controls.
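
To make the model drift risk above more concrete, here is a minimal sketch of one common way to quantify drift for a single numeric feature: the Population Stability Index (PSI). The function name, the equal-width binning, and the `1e-6` floor are illustrative assumptions, not part of any specific SaaS product or framework.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more shift."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width buckets over the baseline range (assumed binning scheme).
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bucket_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge buckets.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A commonly quoted rule of thumb treats a PSI below roughly 0.1 as stable and above roughly 0.25 as a significant shift, but those cut-offs are conventions rather than standards, so any alert threshold should be tuned per feature.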

Implementing AI Governance in SaaS: A Practical Guide

Effective AI governance is essential for mitigating these risks and ensuring responsible AI adoption in SaaS environments. Here's a step-by-step guide:

- Establish a Governance Framework: Define clear roles, responsibilities, and processes for AI development, deployment, and monitoring.

- Conduct Risk Assessments: Identify and assess potential AI-related risks, considering factors such as data sensitivity, model complexity, and potential impact.

- Develop AI Ethics Guidelines: Establish ethical principles and guidelines for AI development and use, addressing issues such as fairness, transparency, and accountability.

- Implement Data Governance Policies: Ensure data quality, security, and privacy through robust data governance policies and procedures.

- Monitor AI Performance: Continuously monitor AI model performance to detect and address issues such as bias, drift, and errors.

- Provide Training and Awareness: Educate employees about AI risks and governance policies to promote responsible AI practices.

- Use the NIST AI Risk Management Framework: Structure your risk management around the framework's four core functions (Govern, Map, Measure, and Manage).
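
As one illustration of the bias monitoring mentioned in the steps above, the sketch below computes a demographic parity gap: the difference between the highest and lowest rate of positive outcomes across groups. The function names and the `(group, outcome)` record shape are assumptions made for this example, and demographic parity is only one of several fairness measures an organization might choose.

```python
from collections import defaultdict
from typing import Hashable, Iterable


def positive_rate_by_group(
    records: Iterable[tuple[Hashable, bool]],
) -> dict[Hashable, float]:
    """Return the share of positive outcomes for each group label."""
    totals: dict[Hashable, int] = defaultdict(int)
    positives: dict[Hashable, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records: Iterable[tuple[Hashable, bool]]) -> float:
    """Difference between the highest and lowest positive-outcome rates."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())
```

A gap of 0 means every group receives positive outcomes at the same rate. What counts as an acceptable gap is a policy decision that belongs in the ethics guidelines, not in the code.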

The NIST AI Risk Management Framework

The NIST AI Risk Management Framework provides a structured approach to managing AI risks. It emphasizes four key functions:

- Govern: Establish a governance structure and policies to oversee AI risk management.

- Map: Identify and document AI systems and their associated risks.

- Measure: Assess the likelihood and impact of AI risks.

- Manage: Prioritize the measured risks and implement controls to mitigate them.
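
The four functions above can be loosely mirrored in a simple risk register. The sketch below is a hypothetical structure, not something the NIST framework prescribes: the `AIRisk` fields, the 1 to 5 scales, and the likelihood-times-impact score are assumptions borrowed from common risk-matrix practice.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class AIRisk:
    system: str        # Map: the AI system or SaaS feature the risk belongs to
    description: str   # Map: what could go wrong
    likelihood: int    # Measure: 1 (rare) to 5 (almost certain), assumed scale
    impact: int        # Measure: 1 (negligible) to 5 (severe), assumed scale
    owner: str         # Govern: who is accountable for the risk
    control: str = ""  # Manage: mitigation in place or planned

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-5 scale.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        """Simple likelihood x impact score (an assumed convention)."""
        return self.likelihood * self.impact


def prioritize(risks: Iterable[AIRisk]) -> list[AIRisk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)
```

Sorting by score gives a first-pass order for the Manage function. Risks with no `control` recorded near the top of the list are natural candidates for immediate attention.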

Key Takeaways

Managing AI risks in SaaS environments requires a proactive and comprehensive approach. By establishing a robust governance framework, conducting thorough risk assessments, and implementing appropriate controls, organizations can harness the power of AI while minimizing potential risks. The NIST AI Risk Management Framework provides a valuable resource for guiding these efforts.