NIST's AI Cybersecurity Overlays: Managing Risks in the AI Era

NIST is developing SP 800-53 control overlays for securing AI systems, alongside its existing AI Risk Management Framework. Learn how these overlays can support the responsible development and deployment of AI.

Artificial Intelligence (AI) is rapidly transforming our world, offering incredible opportunities across various sectors. However, with great power comes great responsibility, and in the realm of AI, this translates to managing the inherent cybersecurity risks. Enter the National Institute of Standards and Technology (NIST), which has released a concept paper proposing SP 800-53 control overlays to help organizations navigate these challenges. But what exactly are these overlays, and why should you care?

Understanding NIST's AI Risk Management Framework

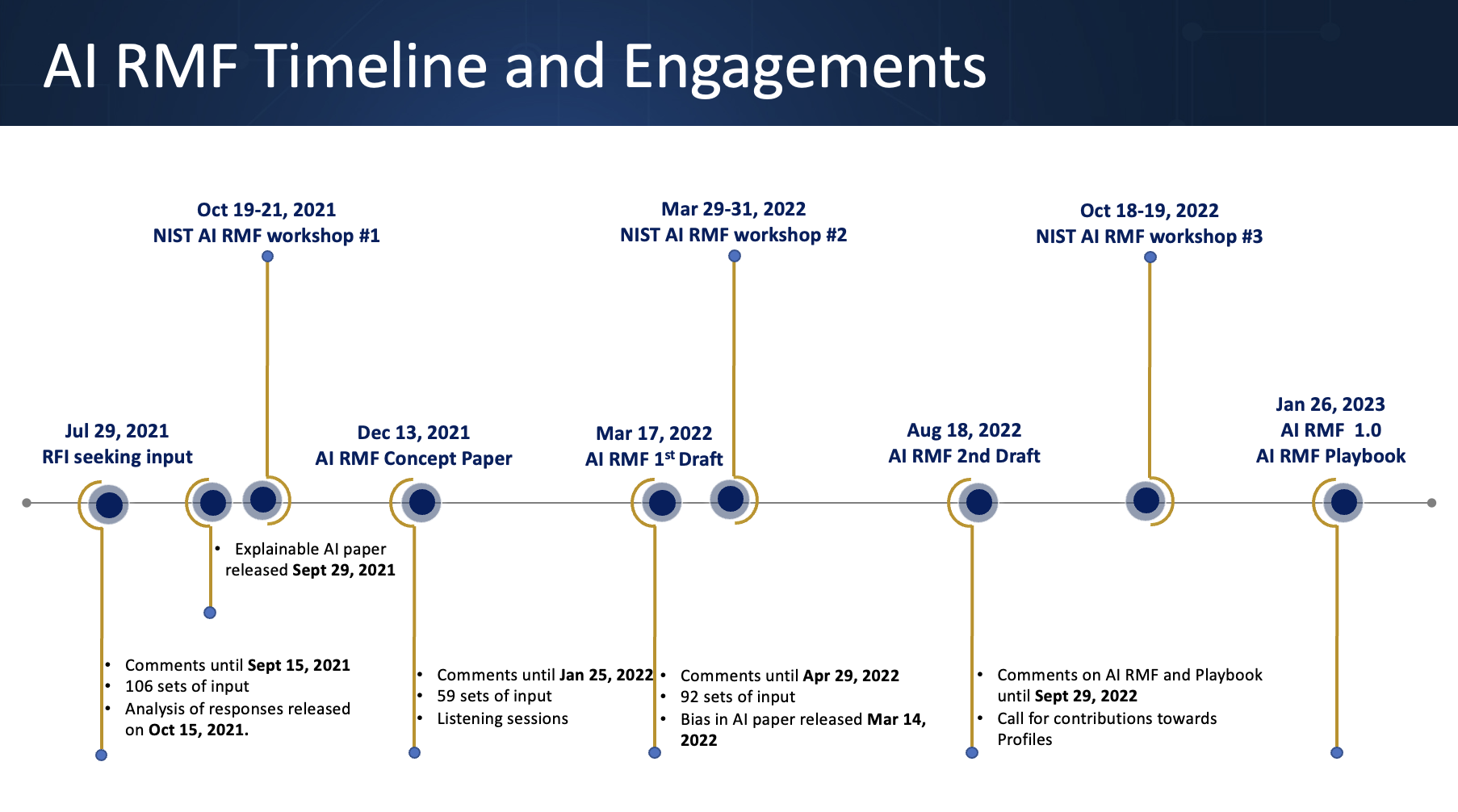

The NIST AI Risk Management Framework (AI RMF) is a voluntary guide designed to help organizations manage the risks associated with AI systems. Think of it as a structured approach to ensure that AI is developed and deployed responsibly, promoting trust and innovation while safeguarding against potential harms. It's not just about compliance; it's about building AI systems that are secure, reliable, and aligned with ethical principles.

So, what are these "control overlays" everyone's talking about? Traditional cybersecurity frameworks often fall short when addressing the unique vulnerabilities of AI systems. An overlay is a tailored set of controls layered on top of an existing catalog, in this case NIST SP 800-53, so it complements the frameworks organizations already use rather than replacing them. The proposed AI overlays provide targeted guidance for managing AI-specific risks: they address novel attack vectors and help organizations implement security measures tailored to their AI applications.
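One way to picture how an overlay complements an existing set of controls is as a small data structure applied on top of a baseline. The sketch below is only an illustration: the `Control`, `Overlay`, and `apply_overlay` names are hypothetical, the control IDs follow SP 800-53 naming (AC-3, SI-7, SI-10), and the control wording and AI-specific supplements are invented for the example rather than taken from any NIST publication.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Control:
    control_id: str  # SP 800-53 style identifier, e.g. "SI-7"
    statement: str   # paraphrased requirement (illustrative wording only)


@dataclass
class Overlay:
    name: str
    # Extra AI-specific guidance, keyed by the baseline control it extends.
    supplements: Dict[str, str] = field(default_factory=dict)
    # Controls the overlay adds when the baseline does not already have them.
    additions: List[Control] = field(default_factory=list)


def apply_overlay(baseline: List[Control], overlay: Overlay) -> List[Control]:
    """Return a tailored control list; the baseline list is not modified."""
    tailored = []
    for control in baseline:
        extra = overlay.supplements.get(control.control_id)
        if extra is None:
            tailored.append(control)
        else:
            tailored.append(
                Control(control.control_id, f"{control.statement} AI-specific: {extra}")
            )
    present = {control.control_id for control in tailored}
    tailored.extend(c for c in overlay.additions if c.control_id not in present)
    return tailored


# Hypothetical example data, not taken from any NIST publication.
baseline = [
    Control("AC-3", "Enforce approved access authorizations."),
    Control("SI-7", "Verify the integrity of software and information."),
]
ai_overlay = Overlay(
    name="hypothetical-ai-overlay",
    supplements={"SI-7": "also verify hashes of training data and model weights."},
    additions=[Control("SI-10", "Validate inputs before they reach the model.")],
)

for control in apply_overlay(baseline, ai_overlay):
    print(control.control_id, "-", control.statement)
```

The point of the design is that the baseline stays untouched: the overlay only supplements or adds controls, which mirrors the idea of complementing an existing framework rather than replacing it.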

Why Are These Overlays Important?

AI systems introduce new and complex cybersecurity challenges. For example:

- Data Poisoning: Attackers can manipulate the training data used to build AI models, causing them to make incorrect or biased predictions.

- Model Inversion: Attackers can query an AI model to reconstruct sensitive information about its training data, compromising privacy and confidentiality.

- Adversarial Attacks: Carefully crafted inputs can fool AI models, leading to errors or malfunctions.

These are just a few examples of the types of risks that the NIST control overlays aim to address. By implementing controls that target these risks, organizations can better protect their AI systems from cyberattacks, preserve data integrity, and maintain the confidentiality of sensitive information.
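To make the adversarial-attack item above more concrete, here is a minimal sketch on a toy linear classifier. Everything in it is an assumption for illustration: the weights, the input, and the `epsilon` budget are made up, and the sign-based perturbation is a simplified, FGSM-style step rather than a technique described in the NIST material.

```python
from typing import List


def score(weights: List[float], bias: float, x: List[float]) -> float:
    """Linear decision score: positive means class 1, otherwise class 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights: List[float], bias: float, x: List[float]) -> int:
    return 1 if score(weights, bias, x) > 0 else 0


def sign(value: float) -> int:
    return (value > 0) - (value < 0)


def perturb(weights: List[float], x: List[float], epsilon: float, target: int) -> List[float]:
    """Move each feature by at most epsilon in the direction that pushes the
    score toward the target class. For a linear model the gradient of the
    score with respect to the input is simply the weight vector."""
    direction = 1 if target == 1 else -1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Toy model and input (made-up numbers).
weights = [2.0, -1.0, 0.5]
bias = -0.5
x = [0.6, 0.4, 0.2]
epsilon = 0.2  # maximum change allowed per feature

x_adv = perturb(weights, x, epsilon, target=0)

print("original prediction: ", predict(weights, bias, x))
print("perturbed prediction:", predict(weights, bias, x_adv))
```

With these numbers, a change of at most 0.2 per feature is enough to flip the predicted class, which is why controls such as input validation and robustness testing matter for AI systems.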

My Take on the Future of AI Security

In my opinion, the proposal of these control overlays is a critical step towards fostering a more secure and trustworthy AI ecosystem. As AI becomes increasingly integrated into our lives, it's essential that we address the potential risks proactively. NIST's work provides a solid foundation for organizations to build upon, but it's just the beginning. Continuous monitoring, adaptation, and collaboration will be key to staying ahead of emerging threats and ensuring that AI benefits society as a whole.

What do you think? How will these control overlays affect the way AI systems are developed and deployed in your industry? It's a fascinating and rapidly evolving landscape, and I'm excited to see what the future holds!

- Image Source: NIST AI Risk Management Framework