Risk Assessment Matrix: Templates and Examples - Sembly AI

In an era defined by rapid advances in artificial intelligence, the question isn't whether AI will transform our world, but how. As AI systems become increasingly integrated into our lives, from healthcare to finance, both their potential benefits and their potential for unforeseen harm grow with them. Are we truly prepared for the risks that come with these powerful technologies?

The Imperative of AI Risk Assessment

Imagine a self-driving car making a split-second decision that causes an accident, or an AI-powered hiring tool perpetuating discriminatory biases. These scenarios aren't far-fetched; they're real possibilities that demand our attention. AI risk assessment is not merely a compliance exercise; it's a fundamental ethical responsibility. It means proactively identifying and mitigating potential harms so that AI benefits all of humanity, not just a select few.

But where do we begin? Fortunately, several frameworks and guides are available to help organizations navigate the complexities of AI risk assessment.

Navigating the NIST AI Risk Management Framework (RMF)

The National Institute of Standards and Technology (NIST) has developed the AI Risk Management Framework (AI RMF), a voluntary, structured, and flexible guide designed to help organizations manage the risks associated with AI systems. At the heart of the AI RMF is its Core, which comprises four interconnected functions:

- Govern: Cultivates a culture of AI risk management and ensures compliance with organizational and regulatory standards. This involves defining roles, responsibilities, and policies related to AI risk management. Govern is cross-cutting: it informs, and is carried through, the other three functions.

- Map: Identifies the context, scope, and potential risks associated with specific AI systems. This includes understanding the system's intended use, data sources, and potential impacts on stakeholders.

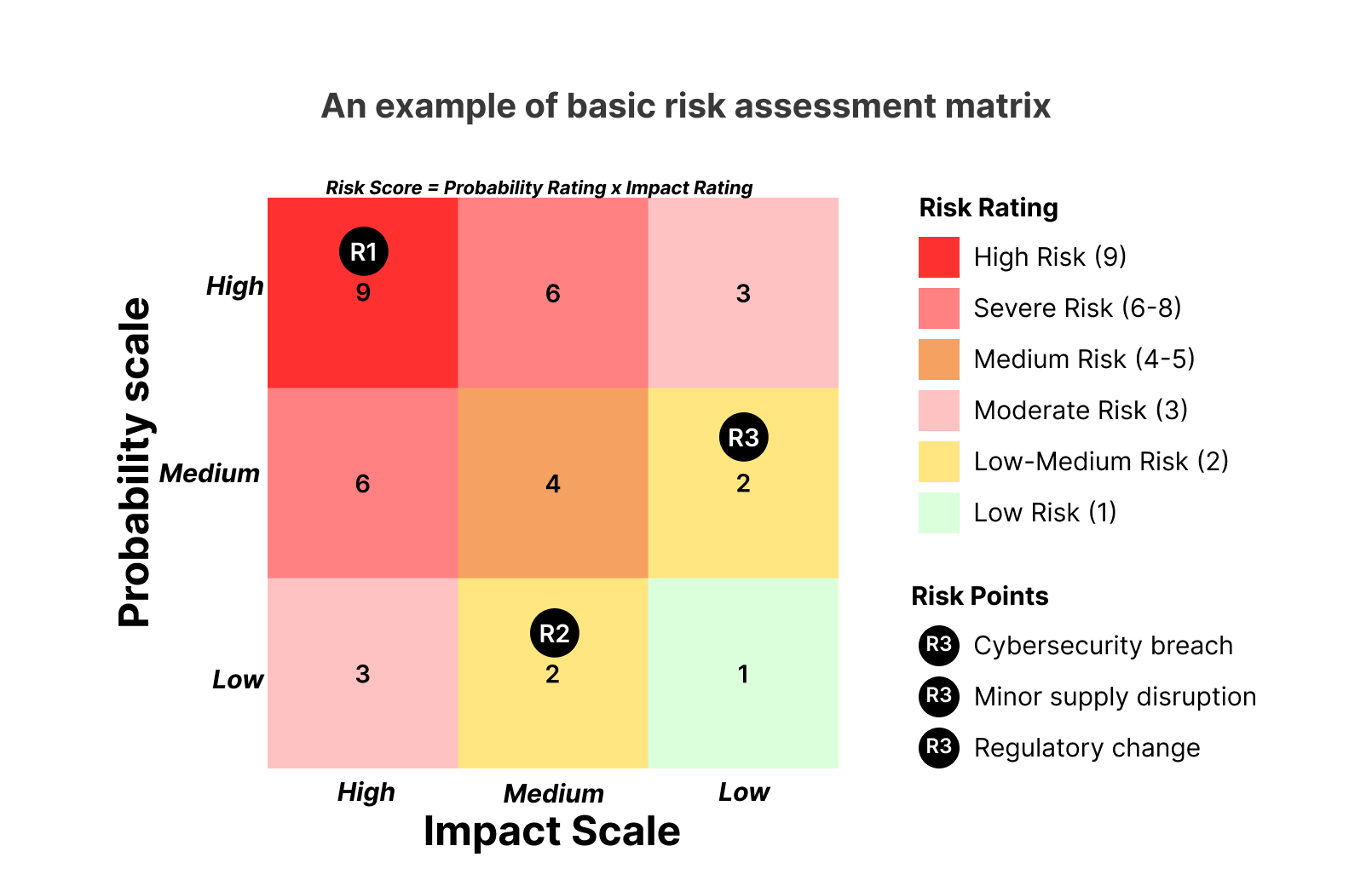

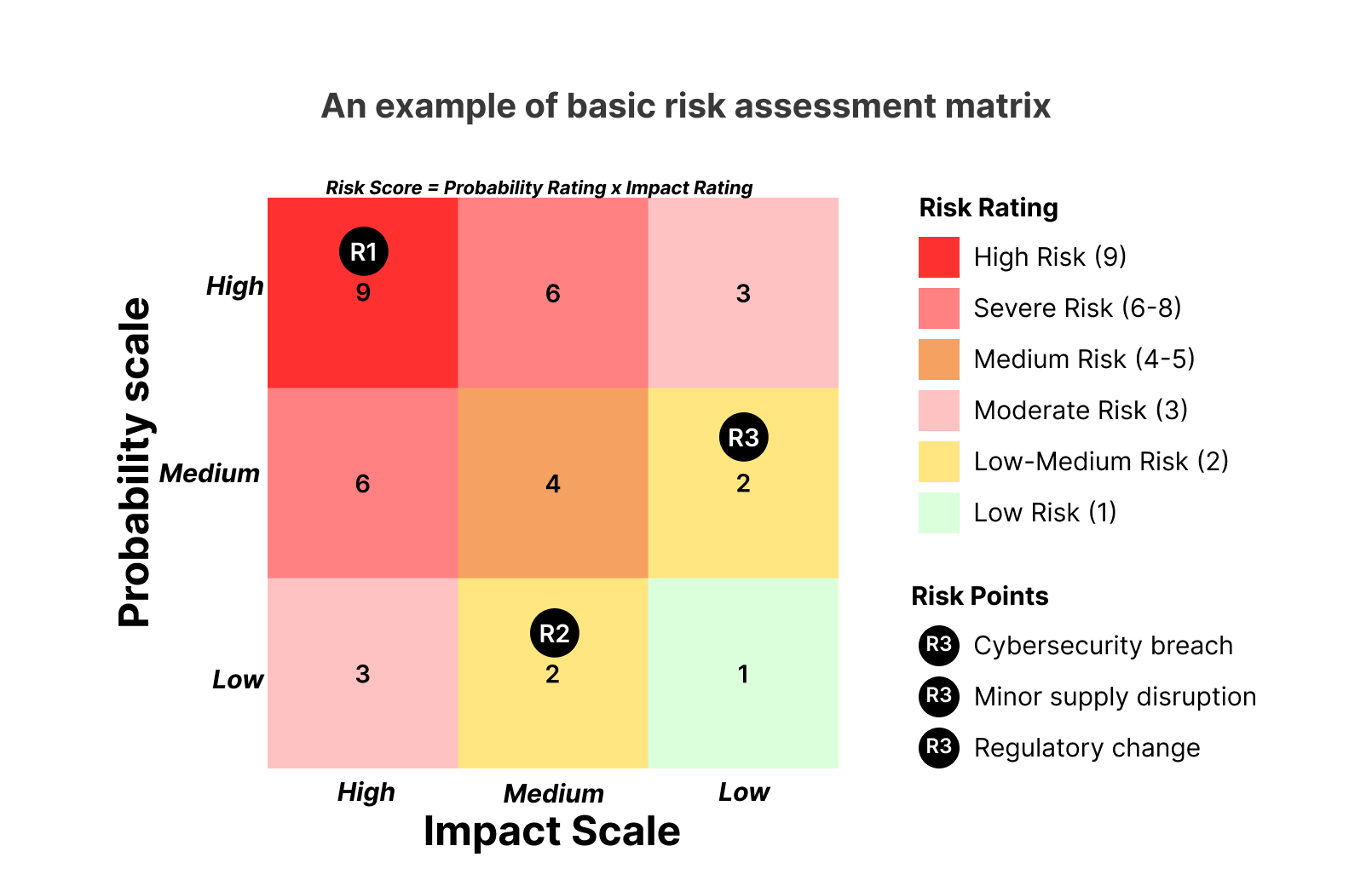

- Measure: Assesses the likelihood and severity of identified risks. This involves using quantitative and qualitative methods to evaluate the potential harms and benefits of the AI system.

- Manage: Implements strategies to mitigate identified risks. This includes developing and deploying safeguards, monitoring system performance, and responding to incidents.

By systematically applying these functions, organizations can proactively identify and address potential risks throughout the AI lifecycle.
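In practice, the Measure and Manage steps are often operationalized with a likelihood-by-severity risk matrix, the kind of template this page's title refers to. The NIST AI RMF does not prescribe any particular scoring scheme, so the sketch below is only one common convention, built on stated assumptions. It uses 1-5 ordinal scales for likelihood and severity, a score equal to their product (1-25), and illustrative band thresholds (15 and above is "high", 8-14 is "medium", below 8 is "low"). The names `Risk`, `rating`, `risk_matrix`, and `prioritize`, as well as the example risks, are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Iterable, List


class Likelihood(IntEnum):
    # Assumed 5-point ordinal scale; adapt labels to your own policy.
    RARE = 1
    UNLIKELY = 2
    POSSIBLE = 3
    LIKELY = 4
    ALMOST_CERTAIN = 5


class Severity(IntEnum):
    # Assumed 5-point ordinal scale for the impact of a harm.
    NEGLIGIBLE = 1
    MINOR = 2
    MODERATE = 3
    MAJOR = 4
    CRITICAL = 5


@dataclass
class Risk:
    """One entry in a hypothetical AI risk register."""
    description: str
    likelihood: Likelihood
    severity: Severity

    @property
    def score(self) -> int:
        # Score is the product of the two ordinal ratings, giving 1..25.
        return int(self.likelihood) * int(self.severity)


def rating(score: int) -> str:
    """Map a 1-25 score to a band; the thresholds are illustrative assumptions."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def risk_matrix() -> List[List[str]]:
    """Build the 5x5 grid of bands: rows are likelihood, columns are severity."""
    return [[rating(l * s) for s in Severity] for l in Likelihood]


def prioritize(risks: Iterable[Risk]) -> List[Risk]:
    # Highest score first, so mitigation effort goes to the worst risks first.
    return sorted(risks, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("Chatbot repeats outdated product details", Likelihood.LIKELY, Severity.NEGLIGIBLE),
        Risk("Hiring model disadvantages a protected group", Likelihood.LIKELY, Severity.MAJOR),
        Risk("Perception model misses a pedestrian", Likelihood.UNLIKELY, Severity.CRITICAL),
    ]
    for risk in prioritize(register):
        print(f"{risk.score:>2}  {rating(risk.score):<6}  {risk.description}")
```

Multiplying ordinal ratings is a simplification: it treats a rare catastrophic harm and a frequent trivial one as comparable when their products match. Many teams therefore add a rule that any risk rated `CRITICAL` on severity is escalated regardless of its score.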

Beyond NIST: Other Valuable Resources

While the NIST AI RMF provides a comprehensive framework, other resources can complement your AI risk assessment efforts. Consider exploring:

- OWASP AI Testing Guide: From the Open Worldwide Application Security Project (OWASP), offers practical guidance for security professionals assessing AI and machine learning platforms.

- CSA AI Controls Matrix: From the Cloud Security Alliance (CSA), provides a framework of control objectives for implementing trustworthy AI.

Key Takeaways

AI risk assessment is not a one-time event; it's an ongoing process that requires continuous monitoring, evaluation, and adaptation. By embracing a proactive and ethical approach to AI risk management, we can harness the transformative power of AI while minimizing potential harms. The free guides and frameworks discussed here provide a solid foundation for building responsible AI systems. The future of AI depends on our collective commitment to ensuring its safe and ethical development.